Serverless Computing Benefits: Transforming Cost-Efficient, Scalable Application Development

Estimated reading time: 9 minutes

Key Takeaways

- Pay-as-you-go pricing ensures you only pay for consumed resources.

- Automatic scaling eliminates manual server management.

- Development teams can focus on code rather than infrastructure overhead.

- Event-driven architecture enhances responsiveness and flexibility.

- Substantial cost optimization is possible for workloads with varying traffic.

Table of contents

- Understanding Serverless Architecture

- Key Benefits of Serverless Computing

- Core Serverless Platforms

- Event-Driven Applications in Serverless

- Cost Optimization Strategies in Serverless

- Use Cases and Success Stories

- Challenges and Considerations

- Conclusion

- Additional Resources

- Frequently Asked Questions

In today’s competitive digital landscape, businesses are constantly searching for ways to optimize their technology infrastructure.

Serverless computing has emerged as a compelling approach for organizations looking to maximize efficiency while minimizing costs. This cloud computing model abstracts away most infrastructure management, allowing developers to focus on writing code while the cloud provider handles provisioning, patching, and scaling.

The benefits of serverless computing extend beyond convenience, offering a path to cost-efficient, highly scalable application development. Unlike traditional server models, serverless computing lets businesses pay only for the compute resources they actually use, eliminating idle capacity and its cost. This approach has gained traction in recent years as companies recognize how serverless computing benefits their bottom line and development agility.

Understanding Serverless Architecture

What Is Serverless Architecture?

Serverless architecture is a cloud computing execution model in which the provider dynamically manages the allocation and provisioning of servers. Despite the name, servers still exist; the difference is that developers no longer manage them. Applications are built as collections of functions that are deployed to a cloud provider and executed on demand when triggered by specific events.

The cloud provider automatically handles:

- Server provisioning

- Maintenance tasks

- Automatic scaling

- Resource allocation

- Security patching

Learn more about the evolution of serverless architecture in this comparison of serverless computing vs. cloud computing and serverless vs. containers.

How Serverless Differs from Traditional Models

Traditional cloud setups demand manual configuration and resource provisioning. Developers often over-provision to handle potential spikes, leading to idle resource costs. In contrast, serverless architecture:

- Requires no server management by the development team

- Scales automatically to match traffic

- Charges for requests and execution time rather than provisioned capacity

- Executes code only when triggered by events

This hands-off infrastructure approach frees development teams to focus on business logic, accelerating innovation and reducing overhead.
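To make the "collection of functions" idea concrete, here is a minimal sketch of a single function in the style of a Python FaaS handler. The `handler(event, context)` signature follows the AWS Lambda convention; the `name` field in the event is a hypothetical payload chosen only for illustration.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per triggering event."""
    # `event` carries the trigger payload; `context` carries runtime metadata.
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test; in the cloud the provider invokes handler() for you.
    print(handler({"name": "serverless"}, None))
```

Nothing in the function starts a server or listens on a port. The platform decides when and where to run it, which is what lets it scale the function without any involvement from the team.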

Scalability and Flexibility Advantages

One of the most powerful aspects of serverless architecture is its inherent scalability:

- Instant scaling during traffic surges

- Automatic scale-down during quieter periods

- Steady performance as load grows, aside from occasional cold starts

- No need for complex autoscaling configurations

Behind the scenes, the cloud provider adjusts resources based on real-time demand. This elasticity enables businesses to handle unpredictable workloads efficiently, without complex capacity planning. Explore more in our hybrid cloud migration strategy guide and this deep dive on scaling new heights with serverless computing.

Key Benefits of Serverless Computing

The sections below look at the most impactful benefits of serverless computing.

Cost Optimization

Among the most compelling advantages is the usage-based approach to cost:

- Zero idle capacity costs

- Billing based on actual execution time

- Automatic scaling avoids paying for unused servers

For instance, AWS Lambda bills per request and for compute duration, metered in 1 ms increments. This pay-as-you-go model can substantially reduce expenses for workloads with fluctuating traffic patterns. Learn more about these top benefits and potential disadvantages.
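The arithmetic behind usage-based billing can be sketched in a few lines. The function below assumes a pricing model with a per-request charge plus a charge per GB-second of compute. The rates passed in the example are illustrative placeholders, not a quote of any provider's current pricing.

```python
def monthly_cost(requests, avg_duration_ms, memory_mb,
                 price_per_million_requests, price_per_gb_second):
    """Estimate one month of usage-based charges for a single function."""
    # Compute is metered as memory (GB) multiplied by execution time (seconds).
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_charge = requests / 1_000_000 * price_per_million_requests
    compute_charge = gb_seconds * price_per_gb_second
    return request_charge + compute_charge


if __name__ == "__main__":
    # Placeholder rates for illustration only; check your provider's price list.
    rates = {"price_per_million_requests": 0.20, "price_per_gb_second": 0.00002}
    busy = monthly_cost(3_000_000, avg_duration_ms=200, memory_mb=512, **rates)
    idle = monthly_cost(0, avg_duration_ms=200, memory_mb=512, **rates)
    print(f"busy month: {busy:.2f}, idle month: {idle:.2f}")
```

The idle month costs nothing under this model, which is the "zero idle capacity" point above. The same formula also shows why both memory allocation and execution time are worth tuning.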

Scalability and Flexibility

Serverless platforms can scale to thousands of concurrent executions without manual intervention, subject to the provider's concurrency limits:

- Instant elasticity for unpredictable workloads

- No capacity planning overhead

- High availability, thanks to multi-zone distribution

This flexibility eliminates much of the maintenance required with traditional server-based or container-based systems.

Check out scaling new heights with serverless computing and serverless computing benefits for more insights.

Faster Time-to-Market

By abstracting away infrastructure management, serverless computing significantly accelerates development cycles:

- Reduced operational overhead

- Streamlined deployments through automated CI/CD

- Independent function updates without redeploying the entire app

Developers can iterate and release features more quickly, which is essential in fast-paced markets. Get more details on automated pipelines in our CI/CD pipeline automation guide and learn how serverless computing integrates with modern identity platforms.

Core Serverless Platforms

While numerous providers offer serverless solutions, two major platforms dominate: AWS Lambda and Azure Functions.

AWS Lambda

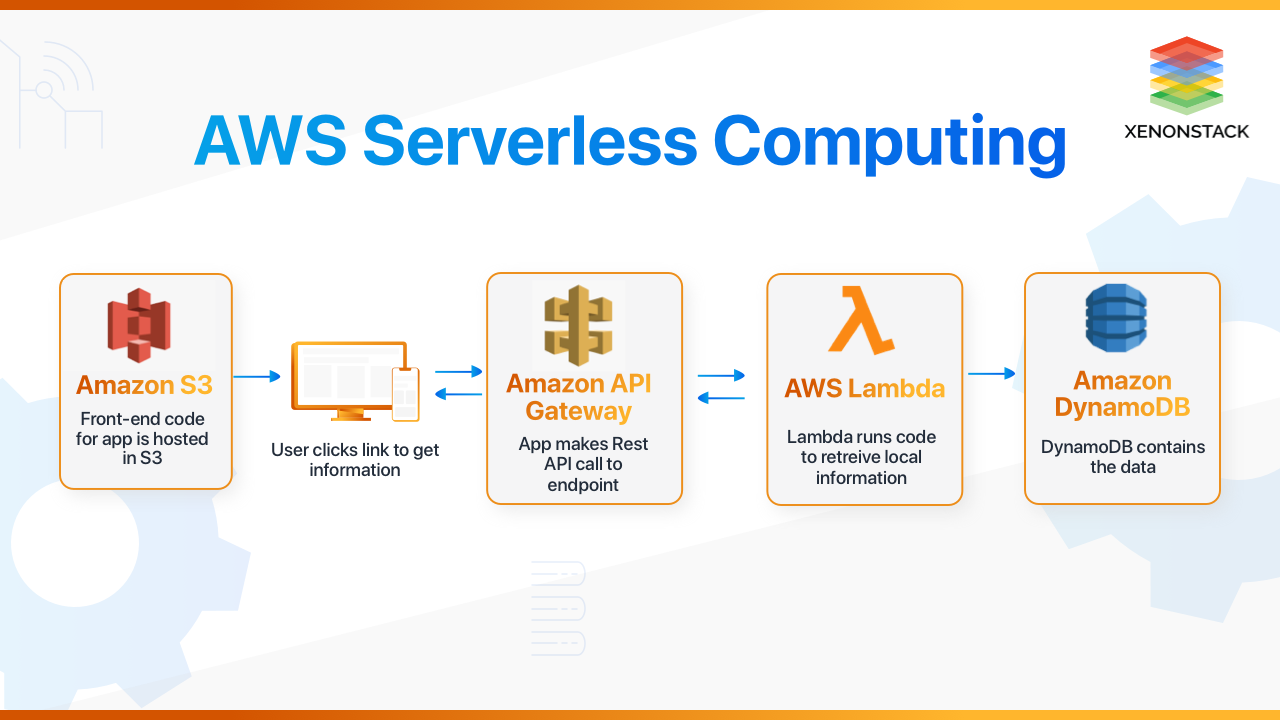

AWS Lambda popularized the Function-as-a-Service (FaaS) model and remains one of the most widely used platforms.

Key Features:

- Supports various programming languages (Node.js, Python, Java, Go, etc.)

- Deep AWS service and third-party integrations

- Fault tolerance via multi-zone distribution

- Monitoring through CloudWatch

- Fine-grained IAM security controls

Common Use Cases:

- Real-time file processing or transformation

- IoT backend services

- Mobile and web app backends

- Scheduled data processing tasks

- Stream processing for Kinesis events

Learn how Lambda powers event-driven architectures in this detailed guide and from Akamai’s serverless computing overview.

Azure Functions

Microsoft’s Azure Functions provides similar functionality but with tight integration into the Azure ecosystem.

Key Features:

- Multiple language support (C#, JavaScript, Python, Java, PowerShell)

- Trigger/binding model for integrations

- Built-in authentication and authorization

- Durable Functions for stateful workflows

Common Use Cases:

- Real-time data processing with other Azure services

- Office 365 and Dynamics 365 automated workflows

- IoT data ingestion and transformation

- Image or media processing

- Scheduled batch tasks

For a deeper comparison, see this blog and additional serverless computing benefits.

Comparative Analysis (AWS Lambda vs. Azure Functions)

When choosing between these two platforms, consider:

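- Pricing Models: Both use consumption-based billing. AWS Lambda charges per request plus compute duration, metered in milliseconds and scaled by configured memory. The Azure Functions Consumption plan similarly charges per execution plus resource consumption based on execution time and memory.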

- Ecosystem Integration: Lambda integrates deeply with AWS services, whereas Azure Functions shines in Microsoft-centric environments.

- Developer Experience: Both are straightforward. Azure’s Visual Studio integration can appeal to .NET shops; AWS has a broader community ecosystem.

For a comprehensive look, consult this deep dive and this overview.

Event-Driven Applications in Serverless

What Makes Applications Event-Driven?

Event-driven applications run code in response to specific triggers:

- HTTP API requests

- Database writes or updates

- File uploads or modifications

- IoT device messages

- Message queue events

- Scheduled cron tasks

This model aligns well with serverless, because resources remain dormant until an event arrives.
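As a rough illustration of how one function can tell these triggers apart, the sketch below inspects the incoming event and labels its source. The field names (`Records[].eventSource`, `source`, `httpMethod`) assume AWS-style event shapes, and the labels and `handler` routing are hypothetical. Other providers deliver differently shaped events.

```python
# Assumption: event shapes follow common AWS conventions; other providers differ.
RECORD_SOURCES = {
    "aws:s3": "file",
    "aws:sqs": "queue",
    "aws:dynamodb": "database",
}


def classify_event(event):
    """Return a short label for the kind of trigger that produced `event`."""
    records = event.get("Records") or []
    if records:
        return RECORD_SOURCES.get(records[0].get("eventSource", ""), "unknown")
    if event.get("source") == "aws.events":  # scheduled rule
        return "schedule"
    if "httpMethod" in event:  # API Gateway proxy-style request
        return "http"
    return "unknown"


def handler(event, context):
    """Single entry point that routes each event to trigger-specific logic."""
    kind = classify_event(event)
    # Real handlers would do trigger-specific work here; this sketch only labels.
    return {"handled": kind}
```

In practice many teams deploy one function per trigger instead of routing inside a single handler. The sketch is only meant to show that each trigger arrives as a plain data payload.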

Real-World Event-Driven Use Cases

Serverless excels in scenarios such as:

- Real-time data processing: Streams from IoT devices, social media, or user interactions

- Automated multi-step workflows

- Loose coupling for microservices through event triggers

- On-the-fly content transformation like generating image thumbnails

Dive deeper into event-driven serverless patterns at Okta’s guide to serverless computing and Appvia’s scaling insights, as well as this future of serverless apps discussion.

Cost Optimization Strategies in Serverless

While serverless inherently offers cost advantages, strategic optimization efforts can yield additional savings.

Function Optimization Techniques

To fine-tune function execution and reduce costs:

- Adjust memory allocation for the right balance of performance vs. cost

- Minimize execution time by optimizing code

- Reduce package size to lessen cold start delays

- Leverage caching to avoid repetitive computation
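The caching technique can be sketched with module-level state, which typically survives between invocations that land on the same warm execution environment. The names `slow_lookup`, `cached_lookup`, and `CALLS` are hypothetical stand-ins, and the cache is best-effort because the platform may recycle the environment at any time.

```python
# Module-level state is created once per cold start and reused while warm.
_CACHE = {}
CALLS = {"slow_lookup": 0}  # counter used only to make the saving visible


def slow_lookup(key):
    """Stand-in for an expensive call such as a database or API request."""
    CALLS["slow_lookup"] += 1
    return key.upper()


def cached_lookup(key):
    """Return a cached result when available, computing it only on a miss."""
    if key not in _CACHE:
        _CACHE[key] = slow_lookup(key)
    return _CACHE[key]


def handler(event, context):
    """Serve the request, paying for the slow lookup at most once per key."""
    return {
        "value": cached_lookup(event["key"]),
        "slow_calls": CALLS["slow_lookup"],
    }
```

Calling `handler({"key": "abc"}, None)` twice in the same environment triggers the slow lookup only once, which reduces billed execution time on the second call.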

See more best practices in our article on infrastructure as code with Terraform.

Resource Management Best Practices

Beyond function-level tuning:

- Use concurrency limits to control costs from spikes

- Analyze usage patterns to spot waste

- Set proper function timeouts

- Leverage provisioned concurrency for critical, steady-traffic functions
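Timeouts interact with how a function does its work. One possible pattern, sketched below, is to check the remaining time budget and stop cleanly before the platform terminates the invocation. It relies on `get_remaining_time_in_millis()`, which the AWS Lambda Python context object provides. `SAFETY_MARGIN_MS`, `process_batch`, and `FakeContext` are hypothetical names for this example.

```python
SAFETY_MARGIN_MS = 2_000  # assumed buffer; tune to how long one item takes


def process_batch(items, context, work=lambda item: item * 2):
    """Process items until done or until the time budget is nearly spent."""
    done, remaining = [], list(items)
    while remaining:
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break  # stop early so leftover work can be retried or re-queued
        done.append(work(remaining.pop(0)))
    return {"processed": done, "unprocessed": remaining}


class FakeContext:
    """Local stand-in for the runtime context, for testing only."""

    def __init__(self, budgets_ms):
        self._budgets = iter(budgets_ms)

    def get_remaining_time_in_millis(self):
        return next(self._budgets)
```

Returning the unprocessed items explicitly avoids paying for an invocation that is killed mid-item and then retried from scratch.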

Cost Monitoring Tools

Maintain visibility into usage and costs with:

- AWS Cost Explorer or Azure Cost Management

- Detailed function-level logging and metrics

- Third-party cost management solutions

- Alerts and notifications for unexpected cost spikes
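Managed tools usually provide alerting out of the box, but the underlying idea is simple enough to sketch. The function below flags days whose cost exceeds a multiple of the trailing average. The `window` and `threshold` defaults and the sample figures are arbitrary assumptions for illustration.

```python
from statistics import mean


def find_cost_spikes(daily_costs, window=7, threshold=1.5):
    """Return indexes of days whose cost exceeds threshold x trailing average."""
    spikes = []
    for i in range(window, len(daily_costs)):
        baseline = mean(daily_costs[i - window:i])
        if baseline > 0 and daily_costs[i] > threshold * baseline:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    # Made-up daily costs: a steady week followed by one unusually expensive day.
    costs = [10, 10, 10, 10, 10, 10, 10, 11, 30, 10]
    print(find_cost_spikes(costs))
```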

Learn more about strategies in Akamai’s serverless overview and this in-depth look at the future of serverless.

Use Cases and Success Stories

Retail Industry Transformation

A major retailer used AWS Lambda to handle holiday-season traffic spikes:

- Traffic soared by 400% in peak seasons

- Migrated from fixed servers to microservices on Lambda

- Reduced infrastructure costs by 30%

- Eliminated performance degradation during spikes

Get details on scaling with serverless to meet unpredictable demand.

SMB Workflow Automation

A mid-sized financial services firm automated document processing with serverless:

- Faced bottlenecks caused by manual tasks

- Transitioned to event-driven serverless functions

- Cut processing time from days to minutes

- Reduced operational costs by 40%

- Improved accuracy and compliance

Learn more about how serverless computing benefits streamline operations.

Challenges and Considerations

Despite its advantages, serverless computing is not without trade-offs. Organizations should account for these factors:

Cold Start Latency

Functions invoked after inactivity may face a “cold start,” causing slower first response times. Mitigation strategies include:

- Function warming with scheduled pings

- Provisioned concurrency for critical paths

- Optimized code and package size

- Choosing runtimes with faster initialization
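Function warming can be sketched as a handler that recognizes a scheduled ping and returns immediately. The example assumes the scheduler is configured to send a payload containing a `warmup` key, which is a hypothetical convention and not a platform feature. The `value` field is likewise illustrative.

```python
# Module-level code runs once per cold start; later invocations reuse it.
_is_cold = True


def handler(event, context):
    """Short-circuit warm-up pings; do real work for everything else."""
    global _is_cold
    was_cold, _is_cold = _is_cold, False

    if event.get("warmup"):
        # The ping's only job is to keep this execution environment alive.
        return {"warmup": True, "cold_start": was_cold}

    return {
        "warmup": False,
        "cold_start": was_cold,
        "result": event.get("value", 0) * 2,
    }
```

Reporting `cold_start` in logs or metrics helps confirm whether the pings actually reduce cold starts. Warming keeps only a limited number of environments alive, so provisioned concurrency may suit critical paths better.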

Vendor Lock-in Concerns

Adopting proprietary features can complicate future migrations. Reduce lock-in by:

- Using infrastructure-as-code for portability

- Abstracting provider-specific code where possible

- Exploring multi-cloud frameworks

- Regularly reviewing platform dependencies
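One way to abstract provider-specific code is to keep business logic in plain functions and confine each provider's event format to a thin adapter. The sketch below assumes an API Gateway proxy-style event with `path` and `body` fields. `Request`, `create_order`, and `lambda_adapter` are hypothetical names for this example.

```python
import json
from dataclasses import dataclass


@dataclass
class Request:
    """Provider-neutral view of an incoming HTTP-style request."""
    path: str
    body: dict


def create_order(request: Request) -> dict:
    """Business logic with no provider imports, so it is easy to test and move."""
    quantity = int(request.body.get("quantity", 1))
    return {"path": request.path, "quantity": quantity, "status": "accepted"}


def lambda_adapter(event, context):
    """Thin adapter that translates one provider's event into a Request."""
    request = Request(
        path=event.get("path", "/"),
        body=json.loads(event.get("body") or "{}"),
    )
    return {"statusCode": 200, "body": json.dumps(create_order(request))}
```

Moving to another platform would then mean writing a new adapter of similar size and leaving `create_order` untouched.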

See our hybrid cloud migration strategy to learn more.

Monitoring and Debugging Complexity

Serverless systems can make end-to-end tracing difficult:

- Distributed functions complicate request tracking

- Limited visibility into the underlying infrastructure reduces direct control

- Logging must be standardized

- Correlation IDs are often required to trace calls that span multiple functions
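Standardized logging and correlation IDs can be combined in a small helper, sketched here. The `x-correlation-id` header name, the `orders` logger name, and the shape of the downstream event are assumptions chosen for illustration. What matters is that every function reuses the incoming ID and emits it in a structured log line.

```python
import json
import logging
import uuid

logger = logging.getLogger("orders")


def get_correlation_id(event):
    """Reuse the caller's ID when present; otherwise start a new trace."""
    headers = event.get("headers") or {}
    return headers.get("x-correlation-id") or str(uuid.uuid4())


def log_event(correlation_id, message, **fields):
    """Emit one JSON log line so every function logs in the same shape."""
    line = json.dumps(
        {"correlation_id": correlation_id, "message": message, **fields},
        sort_keys=True,
    )
    logger.info(line)
    return line


def handler(event, context):
    """Log with the correlation ID and pass it on to the next function."""
    cid = get_correlation_id(event)
    log_event(cid, "request received", function="intake")
    return {"headers": {"x-correlation-id": cid}, "payload": event.get("payload")}
```

Searching logs for a single correlation ID then reconstructs the path of a request across all the functions it touched.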

Specialized monitoring solutions and best practices help maintain observability. For further insights, see Akamai’s blog and this deep dive.

Conclusion

Serverless computing represents a fundamental shift in how applications are built, deployed, and scaled. By removing server management concerns and embracing usage-based costs, teams can:

- Optimize spending with a pay-for-what-you-use model

- Scale instantly in response to real-time demand

- Accelerate development through streamlined processes

- Adopt event-driven architectures with minimal overhead

Though challenges like cold starts and vendor lock-in exist, the serverless model has continued to mature with new solutions and best practices. Organizations seeking cost optimization, rapid iteration, and simplified operations should evaluate how serverless computing aligns with their technical and business objectives.

Additional Resources

For further reading on key concepts, refer to the following:

- AWS Lambda Documentation – Official reference for setup, triggers, best practices

- Azure Functions Documentation – In-depth Microsoft serverless documentation

- Top Benefits and Disadvantages of Serverless Computing – Comprehensive third-party analysis

- Scaling New Heights with Serverless Computing – Advanced scaling discussions

- Okta’s Guide to Serverless Computing – Deep dive into event-driven models

Frequently Asked Questions

What is the core advantage of serverless computing?

The primary advantage is focusing solely on application code while the cloud provider handles server provisioning, maintenance, and scaling. This effectively reduces operational overhead and ensures you only pay for actual usage.

Can serverless computing truly reduce costs for all workloads?

While serverless often lowers costs for variable or unpredictable traffic, constantly running high-throughput applications might benefit from other models. It largely depends on workload patterns and performance requirements.

How can businesses mitigate cold starts?

Techniques like function warming (periodic triggers), using provisioned concurrency, and optimizing package sizes can reduce cold start latency significantly.